Evaluating Consistency of Ratings: Understanding and Applying Cohen’s Kappa Metric

ASCERTAINING CONSISTENCY OF RATINGS – Cohen’s Kappa Metric

Many activities in our day-to-day work involve some kind of evaluation or judgment. When the evaluation is based on quantitative measurements and criteria, we are not surprised if different evaluators give the same rating.

But many evaluations, even if based on well-defined and well-understood criteria, are subjective and left to the discretion of the evaluator. In such cases, how can we be confident that different people give similar ratings? How do we know that the rating does not depend on the luck of the draw, that is, on who happened to do the evaluation?

Cohen’s Kappa

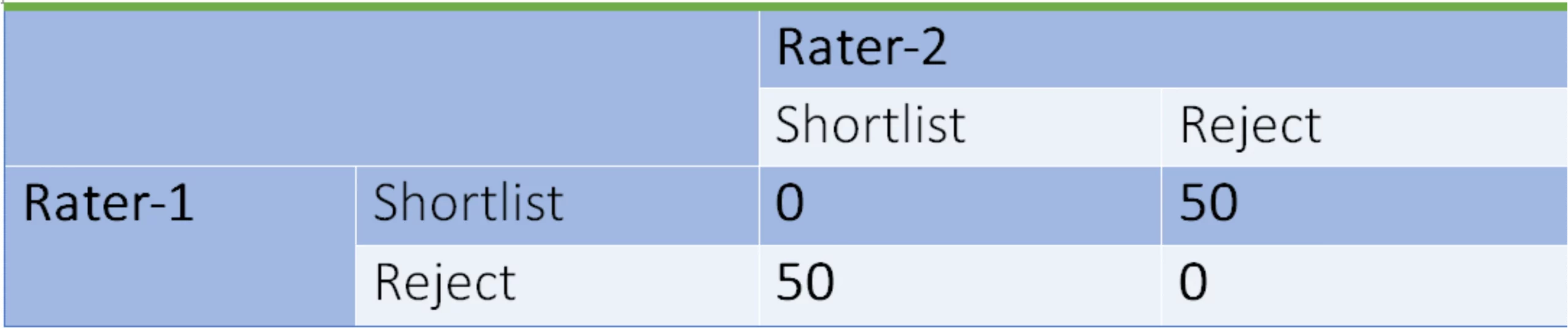

Let there be two recruiters, R1 and R2, deciding whether or not to shortlist resumes for a particular open job position. If each recruiter evaluates the same 100 resumes independently of the other, we can use the data from their decisions to find how consistent those decisions are, using Cohen’s Kappa metric. Suppose we have the following result: both recruiters shortlisted 30 resumes and both rejected 56, while R1 alone shortlisted 9 and R2 alone shortlisted 5.

Suppose one or both of the raters, instead of relying on their knowledge and experience, used a purely random process, like rolling a die or tossing a coin, to decide whether to shortlist or reject a resume. Even then, we could expect them to agree in at least some of the cases, purely by chance.
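To make the idea of chance agreement concrete, here is a small simulation sketch. It assumes both raters decide by tossing a fair coin; the function name, the number of simulated resumes and the seed are illustrative choices, not part of the example above.

```python
import random


def chance_agreement_rate(n_resumes=100_000, seed=0):
    """Fraction of resumes on which two coin-tossing raters agree."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    agreements = 0
    for _ in range(n_resumes):
        # Each rater shortlists with probability 0.5, ignoring the resume.
        r1_shortlists = rng.random() < 0.5
        r2_shortlists = rng.random() < 0.5
        if r1_shortlists == r2_shortlists:
            agreements += 1
    return agreements / n_resumes


rate = chance_agreement_rate()
print(f"Agreement by chance alone: {rate:.3f}")
```

With fair coins the agreement rate comes out close to one half, even though neither rater looked at a single resume. This is the portion of agreement that Cohen’s Kappa is designed to discount.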

Calculating Cohen’s Kappa

Step-1

We find N1, the ratio of the number of observations where the raters agree to the total number of observations. In our example, we have

N1 = (30 + 56) / (100)

This works out to 0.86: the raters agree on 86 of the 100 resumes.

Step-2

We now have to find the ratio of observations where the agreement can be attributed to chance. For this, we find N2, the ratio of observations where both raters shortlisted a resume based on random factors. It is found by calculating the probability of R1 shortlisting a resume, multiplied by the probability of R2 shortlisting a resume. So

N2 = ((30 + 9) / (100)) * ((30 + 5) / (100))

This is because R1 shortlisted (30 + 9) resumes out of 100, while R2 shortlisted (30 + 5) out of 100. Thus the probability of R1 shortlisting a resume is 0.39, while that of R2 shortlisting a resume is 0.35.

Therefore the probability of both raters shortlisting a resume by pure chance is (0.39 * 0.35) = 0.1365.

Step-3

We next find N3, the ratio of observations where both raters rejected a resume based on chance. Using the same method as in Step-2 above,

N3 = ((56 + 5) / (100)) * ((56 + 9) / (100))

Here R1 rejected (56 + 5) resumes out of 100, while R2 rejected (56 + 9) out of 100. The value of N3 is thus (0.61 * 0.65) = 0.3965. This is the probability that both raters reject a resume by pure chance.

Step-4

From N2 and N3, we can find N4, the probability that the raters will agree on a resume by pure chance.

N4 = N2 + N3

N4 = 0.1365 + 0.3965

N4 = 0.533
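The arithmetic of Steps 2 to 4 can be checked with a few lines of Python. This is only a sketch: the counts are the ones used in the formulas above, and the variable names are made up for illustration.

```python
# Counts taken from the worked example (100 resumes in total).
both_shortlist = 30       # R1 and R2 both shortlisted
both_reject = 56          # R1 and R2 both rejected
only_r1_shortlists = 9    # R1 shortlisted, R2 rejected
only_r2_shortlists = 5    # R2 shortlisted, R1 rejected
total = both_shortlist + both_reject + only_r1_shortlists + only_r2_shortlists

# Each rater's overall probability of shortlisting a resume.
p_r1_shortlist = (both_shortlist + only_r1_shortlists) / total
p_r2_shortlist = (both_shortlist + only_r2_shortlists) / total

n2 = p_r1_shortlist * p_r2_shortlist              # both shortlist by chance
n3 = (1 - p_r1_shortlist) * (1 - p_r2_shortlist)  # both reject by chance
n4 = n2 + n3                                      # total chance agreement

print(round(n2, 4), round(n3, 4), round(n4, 4))
```

Note that N3 is computed here from the complements of the shortlisting probabilities, which is the same as using the rejection counts directly because each rater either shortlists or rejects every resume.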

Step-5

Now it only remains to find the value of Cohen’s Kappa. It is defined as

K = (N1 – N4) / (1 – N4)

K = (0.86 – 0.533) / (1 – 0.533)

K = (0.327 / 0.467)

K = 0.70
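The five steps can be collected into one small function. The sketch below is a plain-Python illustration rather than a reference implementation; the name `cohens_kappa` and the label strings are assumptions, and the two lists simply rebuild the 100 decisions from the counts used in the steps above.

```python
from collections import Counter


def cohens_kappa(ratings_1, ratings_2):
    """Cohen's Kappa for two equal-length sequences of category labels."""
    if len(ratings_1) != len(ratings_2) or not ratings_1:
        raise ValueError("need two non-empty, equal-length rating lists")
    n = len(ratings_1)

    # Step-1: observed agreement (N1).
    observed = sum(a == b for a, b in zip(ratings_1, ratings_2)) / n

    # Steps 2 to 4: chance agreement (N4), summed over every category.
    counts_1 = Counter(ratings_1)
    counts_2 = Counter(ratings_2)
    expected = sum(counts_1[c] * counts_2[c] for c in counts_1) / (n * n)

    if expected == 1:
        raise ValueError("kappa is undefined when chance agreement is 1")

    # Step-5: Kappa.
    return (observed - expected) / (1 - expected)


# 30 both shortlist, 56 both reject, 9 only R1 shortlists, 5 only R2 shortlists.
r1 = ["shortlist"] * 30 + ["reject"] * 56 + ["shortlist"] * 9 + ["reject"] * 5
r2 = ["shortlist"] * 30 + ["reject"] * 56 + ["reject"] * 9 + ["shortlist"] * 5

kappa = cohens_kappa(r1, r2)
print(f"Kappa = {kappa:.2f}")  # prints "Kappa = 0.70"
```

Working from the raw decisions rather than from the four counts means the same function also handles ratings with more than two categories, since the chance agreement is summed over whatever labels appear.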

Interpreting Cohen’s Kappa

Applicability

This metric corrects for the agreement that the raters would reach by chance alone, and so gives a more reliable measure of consistency between them than the raw agreement rate.

The metric does not and cannot evaluate the validity of the criteria used! In our example, if the raters started using the physical appearance of the candidates as a criterion for shortlisting their resumes, we might still get a good Cohen’s Kappa measure, but the shortlist itself would be invalid and worthless in terms of quality. So the metric only tells us how consistent the raters are, and nothing more.

This metric can also be used to evaluate a software system. If we used some resume shortlisting software and called it R1, and also had a human rater evaluate the same resumes as R2, Cohen’s Kappa could tell us how closely the software's decisions agree with the human's. We could then decide whether to shortlist all resumes automatically using the software and dispense with human evaluation.