Why Kubernetes Is Now Key to AI and ML Success

Kubernetes is no longer just about containers. It’s fast becoming the backbone for running AI and ML workloads at scale. Why? It’s flexible, it can schedule GPU hardware, and it integrates with tools built for machine learning, such as Kubeflow for pipelines and KServe for model serving. This makes it a strong fit for companies that want to run AI in production.

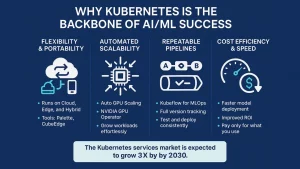

What Makes Kubernetes So Good for AI?

- Auto GPU Scaling: NVIDIA’s GPU Operator automates the installation of the drivers, device plugin, and related components that expose GPUs to Kubernetes, so GPU capacity can be added as your AI workloads grow. Source: Portworx

- Repeatable ML Pipelines: Kubeflow helps teams define, test, and rerun machine learning pipeline steps reproducibly, with version tracking. Source: Wikipedia

- Runs Anywhere: Kubernetes works in the cloud, at the edge, and in hybrid setups. Tools like Palette and KubeEdge help manage this. Source: SpectroCloud
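As a rough illustration of the GPU point above, the sketch below builds a Pod manifest that asks for GPUs through the `nvidia.com/gpu` extended resource, which is the resource name NVIDIA's device plugin advertises. The function name, Pod name, container name, and image are hypothetical placeholders, and the sketch assumes the cluster already has GPU nodes with the device plugin running.

```python
import json


def gpu_pod_manifest(name: str, image: str, gpus: int = 1) -> dict:
    """Build a Pod manifest (as a plain dict) that requests whole GPUs."""
    if gpus < 1:
        raise ValueError("gpus must be at least 1")
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            "containers": [
                {
                    "name": "trainer",  # hypothetical container name
                    "image": image,
                    # GPUs are requested under limits, in whole units only.
                    "resources": {"limits": {"nvidia.com/gpu": gpus}},
                }
            ],
        },
    }


if __name__ == "__main__":
    # The image below is a placeholder, not a real registry path.
    manifest = gpu_pod_manifest("train-demo", "example.com/ml/trainer:latest", gpus=2)
    print(json.dumps(manifest, indent=2))
```

The printed JSON can be saved to a file and applied with `kubectl apply -f`, since Kubernetes accepts JSON manifests as well as YAML.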

Market Growth: AI and Kubernetes Powering Cloud Innovation

The need for AI and ML on Kubernetes is exploding. More businesses are turning to managed Kubernetes services, especially those on cloud platforms like AWS, to run their AI apps efficiently and at low cost.

- Experts expect the Kubernetes services market to triple by 2030. Source: Markets n Research

- AI-as-a-Service is now a major reason companies are spending more on cloud. Source: SpectroCloud

- Kubernetes makes it cheaper and faster for data teams to get results. Source: Wikipedia

- Containerizing ML helps companies launch models faster and improve ROI. Source: Adyog

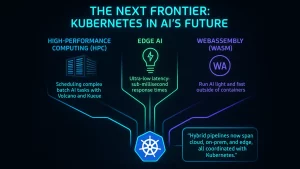

What’s Next? HPC, Edge AI, and WASM

Kubernetes is entering new spaces like high-performance computing (HPC), edge AI, and WebAssembly (WASM). These trends are reshaping how AI gets built and deployed.

- Projects like Volcano and Kueue now help schedule complex AI tasks in batches. Source: InfraCloud

- AI at the edge is getting much faster, with some workloads reported to respond in under a millisecond. Source: SpectroCloud

- WASM lets AI run light and fast outside containers, perfect for edge devices. Source: ResearchGate

- Hybrid pipelines now span cloud, on-prem, and edge, all coordinated with Kubernetes. Source: Cloud4C
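To make the batch scheduling point above more concrete, here is a minimal sketch of a Kubernetes Job that is handed to Kueue for queueing. It assumes Kueue is installed and that a LocalQueue with the given name already exists in the namespace. The queue name, Job name, container name, and image are hypothetical. The `kueue.x-k8s.io/queue-name` label and the `suspend` field are how a plain `batch/v1` Job is typically submitted to Kueue.

```python
import json


def kueue_job(name: str, image: str, queue_name: str,
              gpus: int = 1, parallelism: int = 1) -> dict:
    """Build a batch/v1 Job manifest that Kueue can admit from a queue."""
    return {
        "apiVersion": "batch/v1",
        "kind": "Job",
        "metadata": {
            "name": name,
            # Points the Job at a LocalQueue (assumed to exist already).
            "labels": {"kueue.x-k8s.io/queue-name": queue_name},
        },
        "spec": {
            # Created suspended; Kueue unsuspends it once quota is available.
            "suspend": True,
            "parallelism": parallelism,
            "completions": parallelism,
            "template": {
                "spec": {
                    "restartPolicy": "Never",
                    "containers": [
                        {
                            "name": "worker",  # hypothetical container name
                            "image": image,
                            "resources": {"limits": {"nvidia.com/gpu": gpus}},
                        }
                    ],
                }
            },
        },
    }


if __name__ == "__main__":
    job = kueue_job("finetune-batch", "example.com/ml/finetune:latest",
                    queue_name="ml-batch-queue", gpus=1, parallelism=4)
    print(json.dumps(job, indent=2))
```

Creating the Job in a suspended state is the key design choice: the queueing layer, not the default scheduler, decides when the GPUs are actually claimed.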

Best Practices in 2025 for AI on Kubernetes

Companies using Kubernetes for AI and ML are focusing on smart strategies to save money, improve performance, and stay compliant.

- Use autoscalers, node affinity, and GPU quotas to manage costs. Source: SpectroCloud

- Run CI/CD pipelines for ML using Kubeflow, Katib for hyperparameter tuning, and model version control. Source: Azumo

- Combine zero-trust security with OpenTelemetry to monitor everything. Source: Treebeard

- Use tools like Azure Kubernetes Fleet Manager for hybrid cloud and multi-cluster setups. Source: Adyog

- Run stateful ML tools (like model and feature stores) directly on Kubernetes. Source: Red Hat
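Two of the cost controls listed above, GPU quotas and node affinity, can be sketched as manifests. The example below is illustrative only: the namespace, quota name, and node label key are hypothetical, while `requests.nvidia.com/gpu` is the quota key Kubernetes uses for the `nvidia.com/gpu` extended resource and the affinity structure follows the standard Pod spec.

```python
import json
from typing import List


def gpu_quota(namespace: str, max_gpus: int) -> dict:
    """ResourceQuota that caps the total GPUs requested in one namespace."""
    return {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": "gpu-quota", "namespace": namespace},
        # Extended resources are limited through the "requests." prefix.
        "spec": {"hard": {"requests.nvidia.com/gpu": str(max_gpus)}},
    }


def gpu_node_affinity(label_key: str, allowed_values: List[str]) -> dict:
    """Affinity block that restricts a Pod to nodes carrying a given label."""
    return {
        "nodeAffinity": {
            "requiredDuringSchedulingIgnoredDuringExecution": {
                "nodeSelectorTerms": [
                    {
                        "matchExpressions": [
                            {
                                "key": label_key,
                                "operator": "In",
                                "values": list(allowed_values),
                            }
                        ]
                    }
                ]
            }
        }
    }


if __name__ == "__main__":
    print(json.dumps(gpu_quota("ml-team-a", 4), indent=2))
    # "example.com/gpu-pool" is a made-up label; use whatever labels your nodes carry.
    print(json.dumps(gpu_node_affinity("example.com/gpu-pool", ["training"]), indent=2))
```

The quota is applied per namespace, and the affinity dict would be placed under `spec.affinity` of a Pod or Pod template so that GPU jobs land only on the intended node pool.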

How Impressico Boosts Your AI Projects with Kubernetes

At Impressico, we make Kubernetes-as-a-Service easy and powerful for AI and ML. Our experts help you plan, build, and manage full-stack solutions using the best of managed Kubernetes, AI tools, and cloud infrastructure.

Here’s what we offer:

- Tailored AI and Kubernetes plans that align with your business goals.

- Full cluster setup with GPU support and model serving tools.

- End-to-end ML pipelines with auto deployment, monitoring, and rollback.

- Secure and cost-effective infrastructure across multiple environments.

- Edge and hybrid deployments for real-time applications.

Want to Build Smarter AI Systems with Kubernetes?

Let Impressico Business Solutions be your guide. We’re here to help you get the most out of AI and ML on Kubernetes, whether you run on managed Kubernetes on AWS, in a hybrid cloud, or at the edge.

Book your free consultation today and start building the future of AI.

Kubernetes for AI and ML: Frequently Asked Questions

- How does Kubernetes enhance ROI on AI/ML projects?

  Kubernetes helps you get more from less. It makes better use of your computing power, so you waste less and spend less. It also shortens the time it takes to test and deploy models. That translates to quicker results and more value from your AI efforts.

- What are the cost-saving advantages of employing Kubernetes for AI over conventional cloud VMs?

  Conventional cloud VMs tend to keep running, and keep costing money, even when idle. With Kubernetes, you consume what you need, when you need it, because workloads scale up and down automatically. This can save a significant amount of money, particularly with GPU-intensive AI applications.

- How does Kubernetes enable us to deploy AI models more quickly so that we can get a competitive advantage?

  Kubernetes automates many of the steps involved. Teams can deploy, test, and release AI models quickly, and changes can go live through built-in rollout mechanisms with little manual work. This gives your business an edge over slower-moving competitors.

- What are the risks of running AI/ML on Kubernetes, and how can we mitigate them?

  The main risks are misconfigured installations, inadequate monitoring, and security gaps. These can be mitigated with good tooling, frequent updates, and security best practices. Using a managed Kubernetes service also reduces risk.

- Can Kubernetes handle small-scale experiments and large-scale AI production deployments?

  Yes, it can support both. Start small with a couple of machines and expand later. Kubernetes scales with your requirements, so you can use it for early testing and for production without having to switch platforms.

- How does Kubernetes provide high availability and reliability for mission-critical AI workloads?

  Kubernetes runs your apps across multiple machines. When one fails, the others continue operating. It also monitors your AI services and restarts them if something goes wrong. This keeps your AI running continuously with minimal downtime.

- What kind of business use cases see the biggest success with Kubernetes-powered AI?

  The biggest wins come in areas such as fraud detection, demand forecasting, targeted advertising, and real-time recommendations. Any company that needs immediate insights from data or real-time AI stands to gain significantly from Kubernetes.
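The "scales up and down automatically" answer above rests largely on the Horizontal Pod Autoscaler. As a simplified sketch, its documented core rule is desiredReplicas = ceil(currentReplicas × currentMetric / targetMetric), with no change when the ratio is within a small tolerance of 1.0 (0.1 by default). The function below reproduces only that rule plus min/max clamping. The function and parameter names are illustrative, and the real controller also handles stabilization windows, missing metrics, and pods that are not yet ready.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """Simplified version of the Horizontal Pod Autoscaler scaling rule."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    ratio = current_metric / target_metric
    # Close enough to the target: leave the replica count alone.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    wanted = math.ceil(current_replicas * ratio)
    # Never go outside the configured bounds.
    return max(min_replicas, min(max_replicas, wanted))


if __name__ == "__main__":
    # 4 replicas at 90% utilization against a 60% target.
    print(desired_replicas(4, 90, 60))
    # The same 4 replicas at 30% utilization.
    print(desired_replicas(4, 30, 60))
```

With 4 replicas at 90% utilization and a 60% target, the rule asks for 6 replicas; at 30% utilization it drops to 2, which is where the savings on idle capacity come from.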